Navigating the Future: 5 Key Pathways to Responsible AGI Development

Introduction

Artificial General Intelligence (AGI) represents a revolutionary leap beyond today’s narrow AI systems. Unlike tools such as Siri or ChatGPT, which excel at specific tasks, AGI aims to replicate human cognitive abilities across domains: reasoning, decision-making, learning, and even moral judgment. This transformative potential brings both promise and peril. As AGI inches closer to reality, it becomes crucial to develop it responsibly, ensuring that it aligns with societal values and ethical standards while remaining technologically scalable.

Understanding AGI and Its Evolution

AI exists along a spectrum. At one end is Weak AI: systems that perform narrow tasks with precision, such as recommendation engines or voice assistants. At the other is Artificial Superintelligence (ASI): hypothetical AI far more intelligent than humans. AGI sits between the two, combining human-like cognition with adaptability.

AGI differs from:

- Weak AI: Task-specific, non-adaptive systems.
- Human-like AI: Mimics emotions and responses.
- Human-level AI: Matches our reasoning capabilities.
- Strong AI: Embodied consciousness.
- ASI: Beyond-human comprehension and capabilities.

Understanding these categories helps frame AGI as the pivotal next step in artificial intelligence.

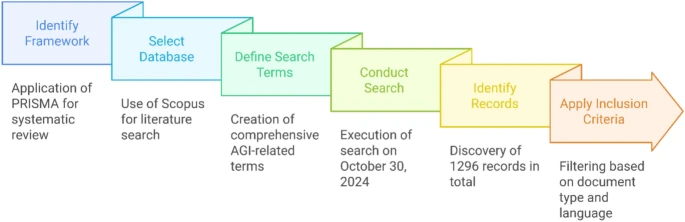

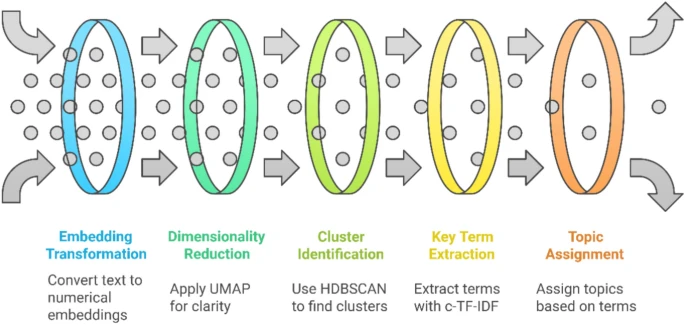

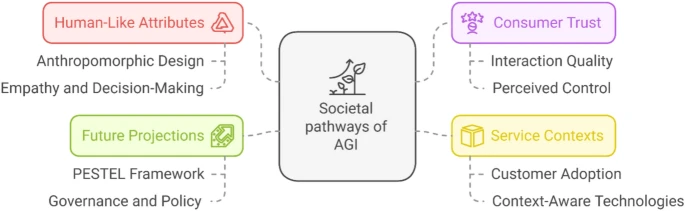

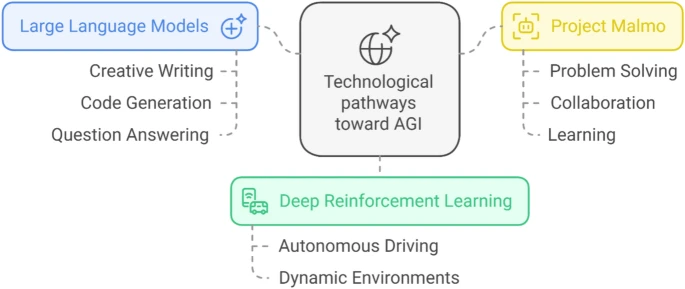

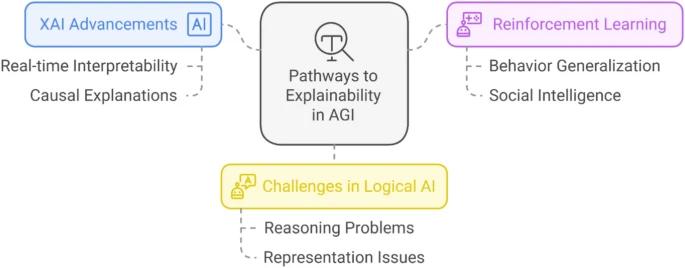

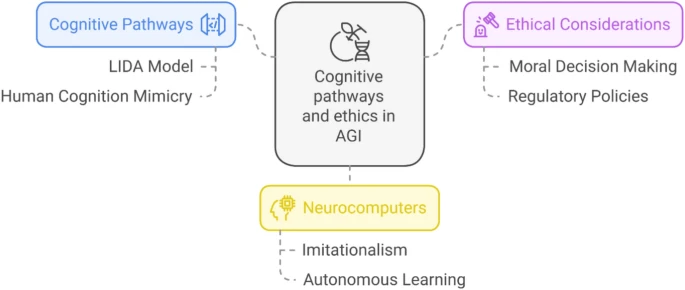

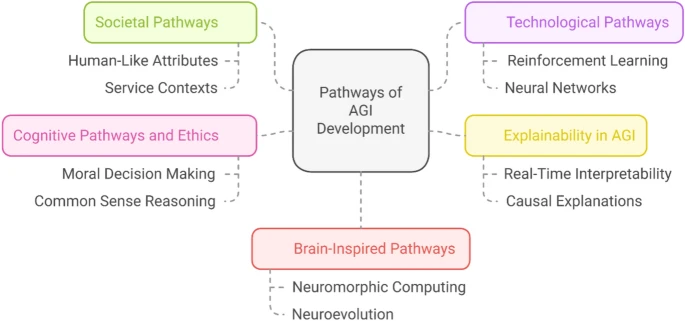

Five Key Pathways to AGI Development

Researchers used BERTopic, a topic-modeling technique, to identify five strategic pathways for AGI development:

-

Societal Pathways: Examines how human-like AGI will be accepted in everyday life, focusing on empathy, trust, and governance.

-

Technological Pathways: Focuses on AI architectures, real-time learning, and task adaptability.

-

Pathways to Explainability: Targets transparency and trust by making AGI decisions understandable.

-

Cognitive and Ethical Pathways: Aligns AGI with moral frameworks and cognitive science.

-

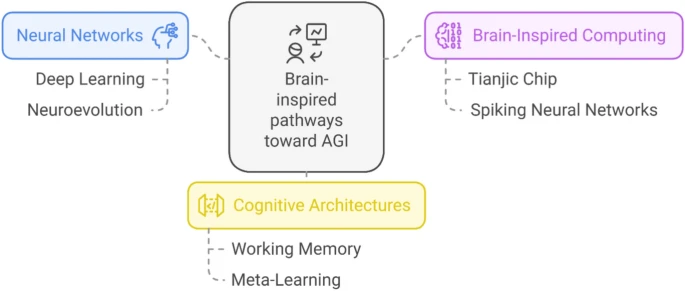

Brain-Inspired Pathways: Leverages neuroscience to make AGI more adaptive and efficient.
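BERTopic’s default pipeline embeds documents with a sentence-transformer model, reduces the embeddings with UMAP, clusters them with HDBSCAN, and then describes each cluster with class-based TF-IDF (c-TF-IDF). The sketch below reproduces only that final labeling step in plain Python, assuming documents have already been assigned to clusters. The toy corpus, the cluster names, and the `c_tf_idf` helper are hypothetical, not the researchers’ data or code. It follows our reading of the c-TF-IDF weighting in BERTopic’s documentation: W(t, c) = (tf(t, c) / |c|) × log(1 + A / f(t)), where |c| is the number of words in cluster c, A is the average number of words per cluster, and f(t) is the term’s frequency across all clusters.

```python
import math
from collections import Counter


def c_tf_idf(clusters: dict[str, list[str]], top_n: int = 3) -> dict[str, list[str]]:
    """Return the top_n highest-weighted terms for each cluster (hypothetical helper)."""
    # Concatenate each cluster's documents into one "class document" and count terms.
    term_counts = {
        label: Counter(word for doc in docs for word in doc.lower().split())
        for label, docs in clusters.items()
    }
    # f(t): frequency of each term summed over all clusters.
    total_freq: Counter = Counter()
    for counts in term_counts.values():
        total_freq.update(counts)
    # A: average number of words per cluster.
    avg_words = sum(total_freq.values()) / len(term_counts)

    labels = {}
    for label, counts in term_counts.items():
        class_len = sum(counts.values())
        weights = {
            term: (tf / class_len) * math.log(1 + avg_words / total_freq[term])
            for term, tf in counts.items()
        }
        # Highest weight first; ties broken alphabetically so output is deterministic.
        ranked = sorted(weights, key=lambda t: (-weights[t], t))
        labels[label] = ranked[:top_n]
    return labels


# Toy corpus, already clustered (hypothetical data).
clusters = {
    "societal": ["agi trust empathy governance", "public trust governance agi"],
    "brain": ["agi neuromorphic chips spiking", "neuromorphic spiking neurons agi"],
}
keywords = c_tf_idf(clusters, top_n=2)
print(keywords)  # {'societal': ['governance', 'trust'], 'brain': ['neuromorphic', 'spiking']}
```

Terms that appear in every cluster, such as "agi" here, are down-weighted by the log factor, so each cluster’s label surfaces its distinctive vocabulary.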

Societal Integration and Ethical Impacts

People tend to trust human-like systems that display empathy and contextual understanding. However, over-reliance on AGI in emotionally sensitive roles (e.g., healthcare, education) raises ethical concerns, and designers must weigh realism against the risk of manipulation. The CASA (Computers Are Social Actors) theory, which explains why people respond socially to human-like machines, and the PESTEL (Political, Economic, Social, Technological, Environmental, Legal) framework together point toward regulating AGI through structured oversight and measures that build public trust.

Technological Progress and Real-world Applications

Technologies such as deep reinforcement learning and large language models (LLMs) already approximate aspects of human-like adaptability. Platforms such as Project Malmo, Microsoft’s Minecraft-based research platform, train AI agents in dynamic environments, mimicking human trial-and-error learning.

But these systems come with challenges:

- Energy consumption
- Scalability
- Regulatory gaps

We must develop AGI architectures that are both powerful and efficient.

Explainability and Trust in AGI Systems

AGI must not be a black box. Explainable AI (XAI) tools such as SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) aim to make model decisions interpretable. As AGI enters sectors like finance or medicine, ensuring transparency becomes vital.
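SHAP builds on Shapley values from cooperative game theory: a feature’s attribution is its weighted average marginal contribution across all coalitions of the other features. The `shap` library approximates these values efficiently; the brute-force sketch below instead computes them exactly for a tiny model, which is only feasible for a handful of features because the number of coalitions grows as 2^n. The `risk_model` function is a hypothetical stand-in for a finance model, and filling in "absent" features from a baseline input is one common convention that we assume here.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values for model(x); features outside a coalition take baseline values."""
    n = len(x)

    def value(coalition):
        # Build an input where only the coalition's features keep their real values.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Shapley weight for a coalition S of this size: |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis


def risk_model(z):
    # Hypothetical scoring model with an interaction between features 0 and 1.
    return 2.0 * z[0] + 0.5 * z[1] - 1.0 * z[2] + 1.5 * z[0] * z[1]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phis = shapley_values(risk_model, x, baseline)
print([round(p, 6) for p in phis])  # [3.5, 2.5, -3.0]
```

The attributions sum to f(x) - f(baseline) = 3.0, the additivity property that makes Shapley-based explanations easy to read; the interaction term 1.5·z0·z1 is split evenly between features 0 and 1.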

Reinforcement learning, reward optimization, and logical reasoning are central to AGI’s growth, but their interpretability must evolve in parallel.
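Reward optimization through trial and error, as in the Project Malmo experiments, can be illustrated with tabular Q-learning, one of the simplest reinforcement learning algorithms. In this minimal sketch, the five-cell corridor environment, the reward scheme, and the hyperparameters (`alpha`, `gamma`, `epsilon`) are hypothetical choices for illustration. A small Q-table also makes the interpretability point concrete: every learned value can be read directly, which a deep network does not allow.

```python
import random

N_STATES = 5         # corridor cells 0..4; cell 4 is the goal (hypothetical toy environment)
ACTIONS = (-1, +1)   # move left or move right


def step(state, action):
    """Move within the corridor; reward 1.0 only when the goal cell is reached."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def greedy(q, state, rng):
    # Pick the highest-valued action, breaking ties at random.
    best = max(q[(state, a)] for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if q[(state, a)] == best])


def q_learning(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: usually exploit, occasionally explore (trial and error).
            action = rng.choice(ACTIONS) if rng.random() < epsilon else greedy(q, state, rng)
            next_state, reward, done = step(state, action)
            # Move Q(s, a) toward reward + discounted value of the best next action.
            target = reward if done else reward + gamma * max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = next_state
    return q


q = q_learning()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)}
print(policy)
```

After training, the greedy policy should move right (+1) in every non-goal cell, and each entry in `q` can be inspected to see why. Ties are broken randomly so that the untrained agent performs a random walk instead of repeatedly pushing against the left wall.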

Cognitive Models and Ethical Reasoning

AGI must mimic not only how humans think but also how we tell right from wrong. Cognitive architectures such as LIDA, which builds on Global Workspace Theory, model decision-making, attention, and memory, and researchers are exploring them as a way to embed moral reasoning into AGI.

As AGI assumes greater autonomy, we must ensure it respects privacy, cultural values, and ethical standards globally.
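Global Workspace Theory describes cognition as many specialized processes competing for a limited shared workspace, whose winning content is broadcast to every process; LIDA repeats this competition-and-broadcast step in a continuous cognitive cycle. The sketch below models a single such step in plain Python. It is a toy illustration, not LIDA’s implementation: the module names, the `salience` scores, and the idea of an ethics module bidding alongside perception and memory are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Proposal:
    source: str      # specialist module that produced the content
    content: str
    salience: float  # strength of the module's bid for attention (hypothetical scale)


@dataclass
class Module:
    name: str
    received: list[str] = field(default_factory=list)

    def receive(self, proposal: Proposal) -> None:
        # Every module hears the broadcast, so all can react to the same content.
        self.received.append(proposal.content)


def workspace_cycle(proposals: list[Proposal], modules: list[Module]) -> Proposal:
    """One toy cycle: proposals compete, and the most salient one is broadcast to all modules."""
    winner = max(proposals, key=lambda p: p.salience)
    for module in modules:
        module.receive(winner)
    return winner


modules = [Module("perception"), Module("memory"), Module("ethics")]
proposals = [
    Proposal("perception", "user asks for a stranger's home address", 0.9),
    Proposal("memory", "similar request was refused last week", 0.6),
    Proposal("ethics", "sharing personal data violates privacy", 0.7),
]
winner = workspace_cycle(proposals, modules)
print(winner.source)  # perception
```

Because the broadcast reaches every module, an ethics module in a fuller design could react to whatever content currently holds attention, even when its own proposal lost the competition.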

Brain-Inspired Systems in AGI

Hybrid chips such as Tianjic combine conventional artificial neural networks with neuromorphic (spiking) computing on a single chip. Inspired by the structure of the human brain, these chips enable multitasking, adaptability, and energy-efficient processing.
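Neuromorphic hardware typically runs spiking neural networks, and the leaky integrate-and-fire (LIF) neuron is one of the most widely used spiking models. The sketch below simulates a single discrete-time LIF neuron; the threshold, leak factor, and reset value are illustrative assumptions, and we do not claim this is the exact neuron model implemented on Tianjic.

```python
def simulate_lif(inputs, threshold=1.0, leak=0.9, reset=0.0):
    """Discrete-time leaky integrate-and-fire neuron; returns 1 for a spike, else 0, per step."""
    v = reset  # membrane potential
    spikes = []
    for current in inputs:
        v = leak * v + current  # leak some of the old potential, integrate the new input
        if v >= threshold:
            spikes.append(1)    # fire...
            v = reset           # ...and reset the potential
        else:
            spikes.append(0)
    return spikes


print(simulate_lif([0.3] * 10))   # [0, 0, 0, 1, 0, 0, 0, 1, 0, 0]
print(simulate_lif([0.05] * 50))  # all zeros: the potential settles below threshold
```

The output is sparse and event-driven: weak input such as 0.05 per step leaks away faster than it accumulates (the potential settles near 0.5), so the neuron never fires. Hardware that only does work when spikes occur is one reason neuromorphic designs can be energy-efficient.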

Neuroevolution, which uses evolutionary algorithms inspired by biological evolution to optimize neural networks, can enhance AGI’s ability to generalize and self-improve.
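In its simplest form, neuroevolution evolves a network’s weights through selection and random mutation instead of gradient descent. The sketch below evolves a single perceptron to compute logical OR; the population size, mutation strength `sigma`, and the truncation-selection scheme are hypothetical choices, and established methods such as NEAT also evolve the network’s topology.

```python
import random

# Truth table for logical OR: ((x1, x2), target)
CASES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]


def predict(genome, x):
    """A single perceptron with step activation; genome = [w1, w2, bias]."""
    w1, w2, b = genome
    return 1 if w1 * x[0] + w2 * x[1] + b > 0 else 0


def fitness(genome):
    # Number of OR cases answered correctly (0 to 4).
    return sum(predict(genome, x) == y for x, y in CASES)


def evolve(pop_size=20, generations=100, sigma=0.3, seed=1):
    rng = random.Random(seed)
    population = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        # Selection: the fitter half survives unchanged and serves as parents.
        parents = population[: pop_size // 2]
        # Variation: each child is a Gaussian-mutated copy of a randomly chosen parent.
        children = [
            [w + rng.gauss(0, sigma) for w in rng.choice(parents)]
            for _ in range(pop_size - len(parents))
        ]
        population = parents + children
    population.sort(key=fitness, reverse=True)
    return population[0], history


best, history = evolve()
print(fitness(best), history[-1])
```

Because the fitter half survives unchanged each generation, the best fitness recorded in `history` can never decrease; mutation supplies the variation that lets the population discover a correct set of weights.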

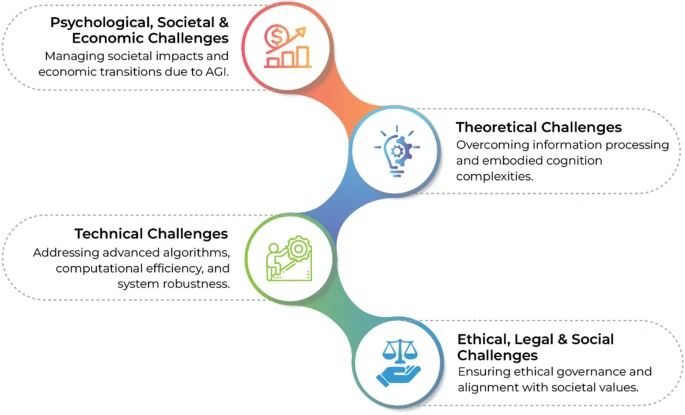

Challenges in AGI Development

AGI faces multifaceted challenges:

- Technical: Cross-domain generalization, computational limits
- Ethical: Bias, fairness, accountability
- Legal: Governance, liability
- Psychological: Human-AI emotional relationships
- Economic: Job displacement, access inequality

Robust frameworks for governance, regulation, and public awareness are urgently needed.

Implications for Policy, Practice, and Theory

- Policy: Develop inclusive, safe, and accountable governance models.
- Practice: Train workforces, implement transparent systems, and support ethical AI design.
- Theory: Expand cognitive models and ethical frameworks to guide AGI evolution.

AGI must not just be powerful. It must be just, inclusive, and sustainable.

Conclusion: Guiding AGI Responsibly

As we move from artificial intelligence to artificial general intelligence, the stakes rise dramatically. With vast potential come vast responsibilities. Only by integrating interdisciplinary research, transparent technologies, and ethical foresight can we guide AGI to serve humanity, not replace it.

Explore more articles like this on Career Cracker to stay ahead in the evolving tech landscape.
